Research performance by academics and organisations would be better assessed using graphical “profiles” that properly contextualise data on measures such as citations, rather than over-simplified metrics such as journal impact factors.

That is the view put forward in a new report by a team from the Institute for Scientific Information at Clarivate Analytics, one of the main organisations that offer universities the ability to assess data on research performance.

The paper, Profiles not Metrics, argues that a number of the most commonly used research metrics suffer “from widespread misinterpretation” as well as “irresponsible, and often gross, misuse”.

“Single-point metrics have value when applied in properly matched comparisons, such as the relative output per researcher of similar research units in universities,” it says.

But such information “can be misused if it is a substitute for responsible research management, for example in academic evaluation without complementary information, or even as a recruitment criterion”.

One section of the paper examines average citation impact, a widely used metric in assessing the performance of academics and universities, and shows how it can be skewed by a small number of high-performing papers.
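The skew is easy to reproduce with a few lines of arithmetic. The Python sketch below is illustrative only: the impact values are invented and do not come from the report, and the variable names are hypothetical. It compares the mean of a set of normalised citation impact values (world average = 1) before and after a single very highly cited paper is added.

```python
from statistics import mean, median

# Hypothetical normalised citation impact values (world average = 1).
# These figures are invented for illustration, not taken from the report.
typical_papers = [0.4, 0.6, 0.7, 0.8, 0.9, 1.0, 1.1, 1.2, 1.3]
outlier_paper = [25.0]

all_papers = typical_papers + outlier_paper

mean_without_outlier = mean(typical_papers)  # roughly 0.89
mean_with_outlier = mean(all_papers)         # roughly 3.3
median_with_outlier = median(all_papers)     # roughly 0.95

print(f"mean without outlier: {mean_without_outlier:.2f}")
print(f"mean with outlier:    {mean_with_outlier:.2f}")
print(f"median with outlier:  {median_with_outlier:.2f}")
```

In this made-up case one paper out of ten lifts the average from below the world average to more than three times it, while the median barely moves.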

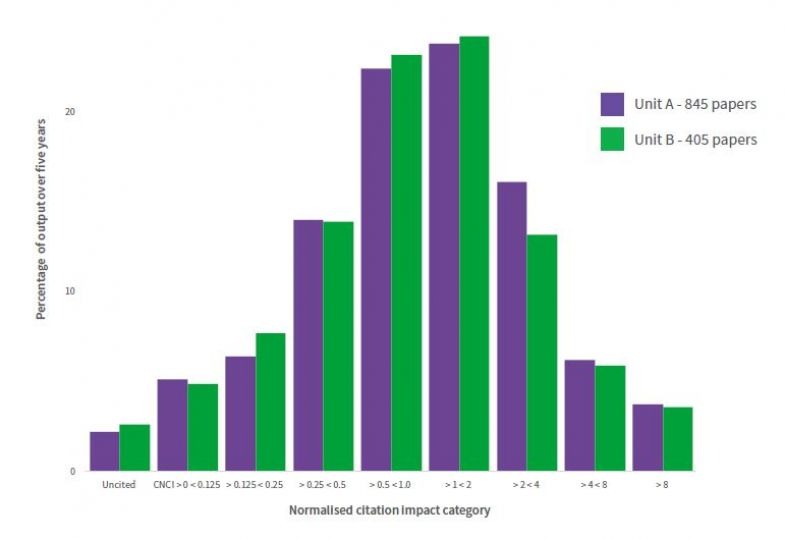

Instead, a histogram showing what proportion of an organisation’s papers published over a given period achieved different levels of citation impact can give a clearer indication of how its performance compares with that of others. The paper provides an example of two unnamed biomedical research units, which had very different average citation impacts but very similar impact profiles.

Impact profile of two UK biomedical research units over five years

Overall normalised citation impact for unit A = 1.86 and for unit B = 2.55 where the world average = 1
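A profile of this kind can be sketched as a simple binning exercise. The Python example below is an assumption-laden illustration rather than the report’s method: the bin edges, the function name `impact_profile` and the two sets of paper-level values are all hypothetical. It returns the share of a unit’s papers falling into each band of normalised citation impact.

```python
from bisect import bisect_right

# Hypothetical bin edges for normalised citation impact (world average = 1).
# The report's own banding may differ.
BIN_EDGES = [0.125, 0.25, 0.5, 1.0, 2.0, 4.0, 8.0]


def impact_profile(impacts, edges=BIN_EDGES):
    """Return the share of papers in each impact band.

    Band 0 holds values below edges[0]; band i holds values from
    edges[i - 1] up to (but not including) edges[i]; the last band
    holds values at or above edges[-1].
    """
    if not impacts:
        raise ValueError("at least one paper is required")
    counts = [0] * (len(edges) + 1)
    for value in impacts:
        counts[bisect_right(edges, value)] += 1
    return [count / len(impacts) for count in counts]


# Invented paper-level values for two units that differ in one paper only.
unit_a = [0.1, 0.3, 0.6, 0.9, 1.5, 1.8, 3.0, 5.0]
unit_b = [0.1, 0.3, 0.6, 0.9, 1.5, 1.8, 3.0, 12.0]

profile_a = impact_profile(unit_a)
profile_b = impact_profile(unit_b)
average_a = sum(unit_a) / len(unit_a)
average_b = sum(unit_b) / len(unit_b)
```

With these invented values the two averages differ markedly, yet the profiles are identical in every band except the top two, which is the kind of pattern the histogram is meant to expose.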

The paper argues that such an approach can provide “routes to answers for research management: where are the collaborative papers; do the same people produce both high and low cited material; did we shift across time?”

It also suggests a similar alternative for journal impact factors, which look at the average citations achieved by a journal and are often used as a way to estimate the relative merits of the journals where academics are publishing research.

“The problem is that JIF, developed for responsible use in journal management, has been irresponsibly applied to wider research management,” the authors of the paper say.

“Putting JIF into a context that sets that single point value into a profile or spread of activity enables researchers and managers to see that JIF draws in a very wide diversity of performance at article level.”

Other metrics critiqued by the paper include the h-index, where the amount of research produced by an academic at a certain level of citation is reduced to a single number. It suggests that a chart that more fully shows a researcher’s citation profile over time would provide a “fair and meaningful evaluation”.
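To see how much the h-index compresses, it helps to compute one. Under the standard definition, a researcher has an h-index of h if h of their papers have each been cited at least h times. The Python sketch below uses that definition; the function name `h_index` and the two citation records are hypothetical and invented for illustration.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank  # the top `rank` papers all have at least `rank` citations
        else:
            break
    return h


# Two invented citation records with very different shapes.
steady_record = [10, 8, 5, 4, 3]
top_heavy_record = [100, 50, 5, 4, 3]

steady_h = h_index(steady_record)
top_heavy_h = h_index(top_heavy_record)
```

Both invented records produce the same h-index even though one includes two far more heavily cited papers, a difference that a fuller citation profile would show.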

Meanwhile, the paper also suggests that a “research footprint” that details the performance of a university across different disciplines or types of data might be more informative than rankings that attempt to boil down an institution to a single number.

Jonathan Adams, director at the ISI, said: “For every over-simplified or misused metric there is a better alternative, usually involving proper and responsible data analysis through a graphical display with multiple, complementary dimensions. By placing data in a wider context, we see new features...understand more and improve our ability to interpret research activity.”
