Many people would like to forget 2020, but I had a good year – professionally, at least. I evolved from being a professor who stands in front of students and spins an entertaining lesson on the fly into a disciplined, one-person educational production company. I learned to script, present and edit high-quality video, and to restructure an entire syllabus for new delivery modes. I discovered the amazing features of Moodle and organised complex multi-participant tutorials in chat rooms. Without doubt, embracing technological change in 2020 has improved my teaching.

Like many, I initially felt a positive side to the pandemic. Systems theory tells us that crises favour fast and flexible actors, and the early days of the pandemic flowed with creativity, as resilient, dogged teachers seized the opportunity to experiment. We made conference phone calls, video-links and audio recordings; we built web pages and ad hoc blogs; we repurposed existing tools and invented new ones.

That’s nothing new. Tools widely adopted today for business, software development and teaching were pioneered in universities such as the Massachusetts Institute of Technology and UCL. As teachers, we built systems to connect with our students. We administered and adapted them ourselves.

When the crunch came in 2020, we moved teaching online, saying: “Let’s do it our way and put our best foot forward!” So, for a few innovative months, a thousand flowers bloomed. It was like the emergence of the World Wide Web in the 1990s all over again. Ideas from those dull “technology and teaching” conferences we had slept through suddenly acquired urgent relevance. Disinhibited by sheer necessity, we experimented with blended learning and flipped classrooms.

Freedom and opportunity knocked because the crisis temporarily disabled the micro-managing control centres of what political scientist Benjamin Ginsberg describes in his 2011 book, The Fall of the Faculty, as “the all-administrative university”. With the cat away, the mice could play. Diversity, resilience and optimism flourished. I felt a certain euphoria in taking command of a bad situation. As the unique and individual talents of teachers shone forth and we took back ownership of our teaching practices, our students really appreciated our fresh, creative efforts and our commitment to improving on them.

The atmosphere felt particularly pregnant with possibility in the warm late June days, as the first UK lockdown wound down and we turned our thoughts to how to approach the autumn term. That was my “golden moment”. Yet, as security engineer Bruce Schneier warns us, there is only a short time lag between technological change favouring versatile actors and established power retaking the advantage.

I want to argue here that universities are fostering abusive technologies that replace empowerment with enforcement. There are worries, and much evidence, that we are already giving away too much control to Big Tech companies, which not only have vast appetites for our data, but also harbour ambitions to usurp the role of universities. Google offers courses with certificates it considers equivalent to three-year bachelor’s degrees to people it is hiring, for instance. And US universities such as Duke partner with Google Cloud to deliver large parts of their curriculum as outsourced digital education.

The problem is not that these services are poor substitutes for in-person education. On the contrary, they are very good at providing a narrow range of outcomes: namely, consistent, efficient training and testing. But that is not the same thing as education.

Annually, Google is now offering 100,000 “need-based” scholarships to take one of a new suite of “Google Career Certificates” that will “help Americans get qualifications in high-paying high-growth job fields”. And while the online course provider Coursera was founded at Stanford and professes to partner with more than 200 leading universities, it is easy to predict that, like Netflix and Amazon, it might ultimately decide to cut out the middleman and generate its own content for its claimed 77 million learners. To save ourselves from capture by such online giants, with whom we cannot possibly compete on scale and cost, we must leverage the one unique asset we still have: our humanity. Right now, sadly, we seem to be doing much to help technology destroy that.

One important element of this is the way that information and communications technology (ICT) within universities is shifting away from a service culture that supports diverse and creative teaching to creating opaque power centres that set and enforce lockstep policy. The pandemic has thrown that tension into sharper relief. Administrators’ priorities were torn between allowing staff freedom – so they could deliver high-quality teaching by engaging with students on their own initiative – and maintaining control. In some universities, computing equipment sat idle in labs because, rather than opening virtual private networks (VPNs) for students isolated at home, administrators doubled down on security policies. It was easier and cheaper to purchase virtual private servers (VPSs) online. However, these Cloud packages subtract from the expertise of teachers and the diversity of the student experience, reducing professors to help-desk assistants.

A major factor is dwindling expertise in university ICT centres. Poor wages and outsourcing have reduced the once prestigious and challenging role of system administrator to box-checking. Automated, faceless “issue ticketing” systems are run from overseas call centres. Consolidation into ever more centralised, vertically integrated systems and dislocation into the Cloud have taken away control of our IT. Full-time university staff who can create and maintain systems or help us with faults and queries have largely disappeared. What remains is a negative permissions culture.

This may mean that a spirit of possibility, in which we teach students to be innovative and creative, is under threat. When universities ran on Libre software, teachers could install their own. Today, an overuse of proprietary software with complex licensing arrangements creates too much process. If it takes too long to get software installed, innovation dies. ICT hubs began to treat shared institutional resources as “their network”, as opposed to “our network”. Extrapolating this trend under conditions of aggressive outsourcing and remote working, we will soon all be subjects of “someone else’s network”, generally owned by a Big Tech company.

Yet what has become clear during the pandemic is that universities that retained their IT independence were at an advantage. Those able to deploy open-source servers using idle bandwidth and spare IP addresses pushed ahead with remote video meetings and teaching. Meanwhile, those without expertise left staff and students to fumble among a collection of privacy-invasive, mutually incompatible corporate tools such as Zoom, Skype and Teams, all of which have since revealed serious privacy and security issues. The pandemic rewarded tech giants who rushed into the vacuum left by absentee technical administration.

To imagine what this portends for mass education, look at chess. On 16 October 2020, The Guardian reported on an emerging crisis in professional online competition. To maintain the viability of remote matches, players are locked in rooms surrounded by surveillance; their eye motion and slightest body movements are monitored, while catheters are fitted so they can take a pee without moving.

This may sound understandable when prizes of £10,000 are at stake. But higher education is also a high-stakes game, in which UK students invest tens of thousands of pounds. Most of us recognise that a small number will do anything to get their degree certificate.

As a result, awkward and often contradictory rituals around plagiarism abound. Outside the computer science department, I doubt that many academics have processed the extraordinary implications of the latest generation of machine-learning text generators (such as GPT-3). Today, my students can choose between several purveyors of essays and coursework written specifically for their degree. These are churned out by the thousand, products of AI-assisted academic sweatshops. (Astonishingly, I found a site in India that specifically sells papers for my course, naming me as the professor and quoting the course code. I am internet famous! – although I was somewhat insulted to find the going rate for a B grade on my course is only $19.99: I need to up my game.)

It is no wonder we wish to wash our hands of this problem. Companies such as Turnitin seem to have come to the rescue, and have quickly become regarded as an “essential” part of academic life. Yet Turnitin is criticised by Dutch technology writer Hans de Zwart as a monopolist, since its onerous terms demand a “royalty-free, perpetual, worldwide, irrevocable license” to the students’ works. Furthermore, our students are given no genuine option to decline using it, creating a legally dubious form of coerced consent.

The reality is that we cannot beat determined cheats. As this arms race escalates, “study-hacking” tools will get smarter, cheaper and more widely available. Our attempts to identify, monitor and spy on our students will become more draconian and indefensible. As always, the losers in this technological crossfire will be our most honest students and most trusting professors.

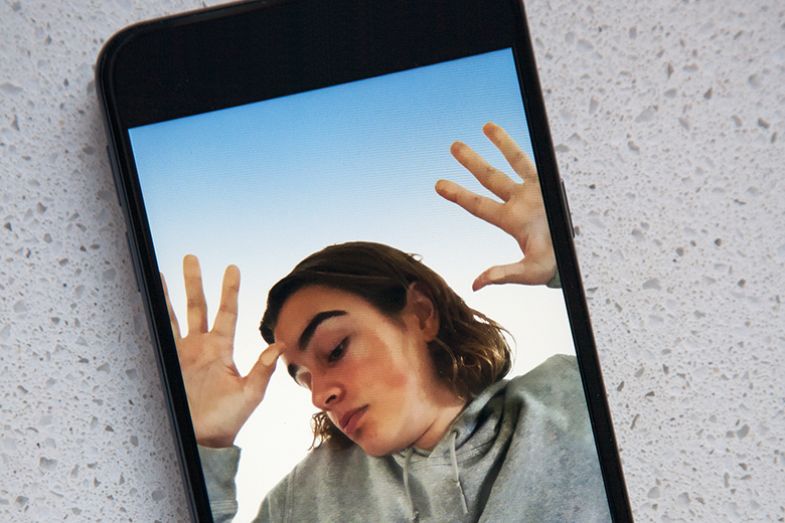

We can already see signs of what lies ahead. So-called exam proctoring software has been causing misery. With tests now taken at home, a new breed of companies, such as Respondus, are part of an emerging “exam surveillance industry”. Canada’s Wilfrid Laurier University and the City University of New York (CUNY) are just two institutions to have come under fire from students angry at attacks on their privacy and dignity when (threatened with failure for non-compliance) they are compelled to install privacy-invading applications on their computers.

To be absolutely clear, this is intimate, real-time bodily surveillance using AI facial and audio analysis. The software requires students to have a high-speed, reliable internet connection. They are made to perform a 360-degree camera scan of their room. AI identifies any “suspicious” objects, which must then be removed. A sensitive microphone constantly listens, snooping for “suspicious” noises. If students look away from the camera for too long, yawn, adjust their glasses or seem distracted – or even if, through no fault of their own, their internet falters or the lighting in the room changes too quickly – they fail.

It is hard to imagine a more intrusive and stress-provoking scenario. Students have likened it to a basement polygraph interrogation or the Ludovico technique from Anthony Burgess’ A Clockwork Orange (a form of aversion therapy in which drugged subjects are strapped into a chair with their eyes held open).

The spyware itself exhibits hallmarks of criminal malware. It disables essential functions on the student’s computer, including other defensive measures such as virus checkers, and can be difficult to remove. Many students cannot run it, causing arbitrary discrimination. It is also legally dubious, of course, on any number of privacy grounds.

Adopting such spyware represents a breakdown of trust between a university and its students. When I discussed it with some of them in a cyber-security seminar, they left me in no doubt about the threat to my profession. Asked what they would do if confronted with the prospect of such dignity-stripping intimate surveillance, “not becoming a student” was their top choice. (Many students have refused to install it in places where it was deployed in the US.) For my own part, as both teacher and computer security expert, I made it clear that I would not support or participate in the implementation of such technologies, and that I strongly advised any students to refuse them.

So what can be done to rehumanise teaching and avoid these nightmare scenarios?

Where professors can still make choices, we must urgently re-capture academic technology and reject Big Edu-Tech systems. We must favour products that use standardised, Libre open-source software or, even better, software developed within our own institutions. Reinvesting in the ICT department is essential to bring technological innovation back to the universities where it started and where it belongs. Technology must shape teaching according to our highest moral values, not just efficiencies. We must show our students trust and we must respect their privacy, dignity and self-determination.

We are likely to see many more scandals around intrusive educational spyware, data harvesting and outsourcing to Big Tech. But that also gives smart universities an opportunity to leverage unique new selling points: “digital dignity ratings”, for instance, could begin to replace grade point averages and sports facilities in student recruitment drives. Applications that normalise surveillance, cultivate blind compliance and teach young people to game systems should be chased out of our universities. I am convinced that institutions that value freedoms, open standards and integrity for students and staff will win the long game.

Perhaps there is also a new role for university ethics boards. These have been active in steering student research away from certain contentious areas, yet are largely silent on the policies of universities themselves. Let them start judging ICT procurement from suppliers that are convicted monopolists, flout data protection regulations or sell cyber-weapons to oppressive regimes. They might even want to warn us about using companies that directly compete with universities for market share.

The stakes are high. If we can rebuild trust with our students, restore pastoral, diverse ways of working and recreate faculty and ICT centres as places of innovation, then universities can survive against global tech giants. But if we don’t adopt such reforms, universities won’t be worth saving anyway – because they will no longer be recognisable as universities.

Andy Farnell is a visiting and associate professor in signals, systems and cyber-security at a range of European universities. His latest book, Ethics for Hackers, will be published later this year.

Postscript

Print headline: We must avoid a digital dystopia